How much should I spend testing new ads or audiences?

March 31st, 2022

Testing too much or too little can destroy a campaign's performance.

Luckily, if you match your spending with the chance that each option performs the best, you can find a near-optimal balance.

There is a tension between learning and using. If we spend too much testing new ideas we won't have enough budget left for the best performers. However, the less we test, the less likely we are to find the best performers.

With performance differences between content often reaching hundreds of percent, either testing too much or too little can destroy a campaign's performance.

Balancing earning and learning

Trying to test the ideal amount is sometimes called finding the "learn/earn" balance or, if you are feeling a bit more aggressive, the "explore/exploit" balance. We need to learn enough to make informed choices, but we also need time and money left to take advantage of what we have learned.

We need to find this balance almost anywhere we don't know the best option from the start. If you have ever tried to find the best restaurant, hire the best team member, or find the best performing ad, you have faced this problem before.

A technique that usually works

Across all the simulations and real-life tests that we have done, one of the best performing approaches is "randomized probability matching."

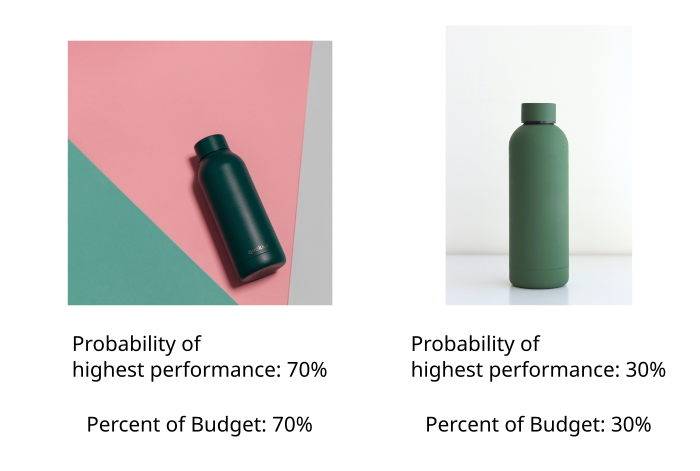

The general idea is to match how much you spend with how much you know. As an example, let's say we have two images. We have run a small initial test (Deciding Data usually starts with $40 for each new variant). This test tells us that one image has a 70% chance of outperforming the other.
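As a rough illustration of where a number like "70% chance of outperforming" can come from, here is a minimal sketch. It assumes each variant's results are simple success counts (for example, conversions out of clicks), a uniform Beta(1, 1) prior, and a Monte Carlo estimate. The function name `probability_best` and the test numbers are hypothetical, and this is not necessarily how any particular system computes the figure.

```python
import random


def probability_best(results, draws=20000, seed=0):
    """Estimate the probability that each variant has the highest true rate.

    `results` maps a variant name to (successes, trials).
    """
    rng = random.Random(seed)
    wins = {name: 0 for name in results}
    for _ in range(draws):
        # Draw one plausible rate per variant from its Beta posterior
        # (assumption: uniform Beta(1, 1) prior on every variant).
        sampled = {
            name: rng.betavariate(1 + s, 1 + (n - s))
            for name, (s, n) in results.items()
        }
        # Credit the variant that came out on top in this draw.
        wins[max(sampled, key=sampled.get)] += 1
    return {name: count / draws for name, count in wins.items()}


# Hypothetical results from a small initial test: (successes, trials).
test_results = {"image_a": (12, 400), "image_b": (9, 400)}
probs = probability_best(test_results)
print(probs)
```

The share of draws in which a variant comes out on top is our estimate of the chance that it is the best performer.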

Randomized probability matching says we should spend 70% of our budget on the image with a 70% chance of being the best, and the remaining 30% on the other image. This way, there is a good chance we are putting most of our money on the best performer. However, we could be wrong, so we hedge a little. In fact, we hedge by exactly the chance that we could be wrong. :)
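The budget rule itself is simple arithmetic. A minimal sketch, assuming we already have a probability for each option (the helper name `allocate_budget` and the $1,000 budget are hypothetical):

```python
def allocate_budget(prob_best, total_budget):
    """Split a budget in proportion to each option's chance of being the best."""
    total = sum(prob_best.values())
    if total <= 0:
        raise ValueError("probabilities must sum to a positive number")
    # Normalize in case the probabilities do not sum to exactly 1.
    return {name: total_budget * p / total for name, p in prob_best.items()}


# The two-image example from the text, with a hypothetical $1,000 budget.
split = allocate_budget({"image_a": 0.70, "image_b": 0.30}, total_budget=1000)
print(split)
```

With the 70/30 example, about $700 goes to the likely winner and about $300 keeps testing the other image. The same function works unchanged for three or more options.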

This approach helps our customers quickly dedicate resources to clear winners and continue to test when performance is close.

We built our system to make this easy

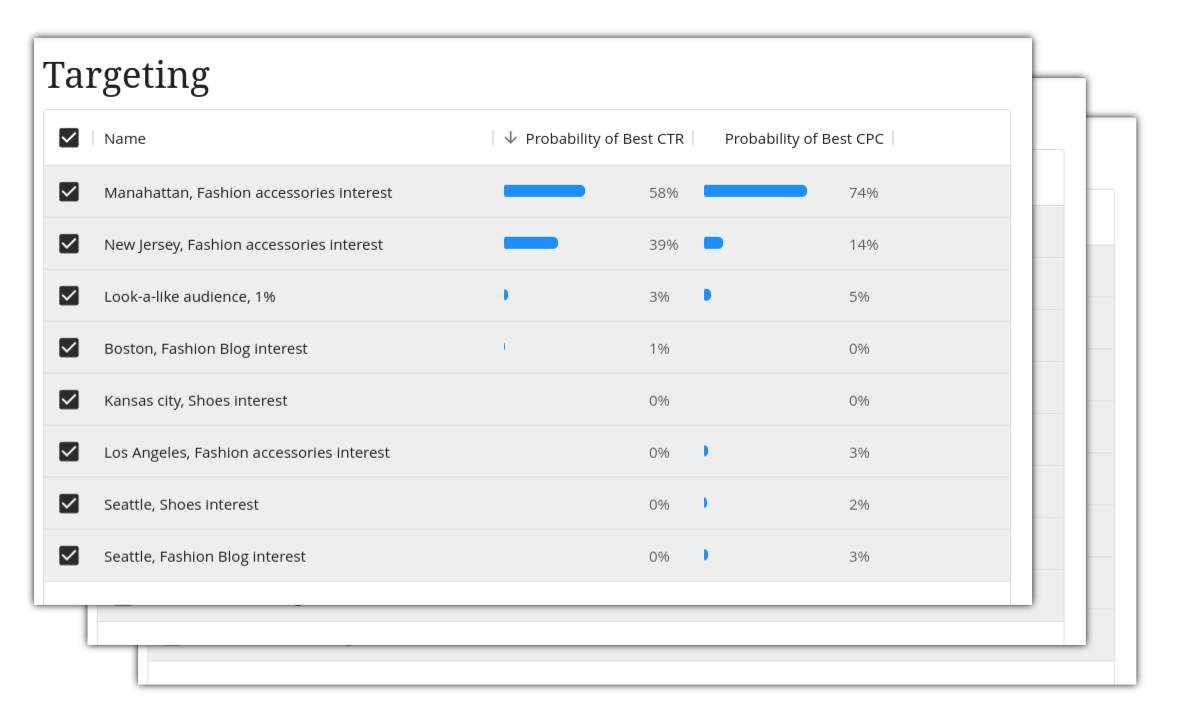

The explore/exploit trade-off is so fundamental to running high-performing ad campaigns that we have built our system to make finding the right balance easy. Our system reports the probability that each option is the best-performing one. You can use this information to understand your results and to set budgets.

Our system combines all the audiences, images, or titles you have tested into one big ranking for each type. It also lets you interactively select particular components (for example, the ones that include a particular product), and it immediately recalculates the probability that each option is the best within that sub-group.

As you run more experiments, it automatically updates the probabilities.
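To see why updating matters, here is a small simulation of randomized probability matching over many rounds. It is a sketch under stated assumptions, not a description of our system's internals: the true rates are made up, each round spends one unit of budget, and the option is chosen by drawing one sample from each Beta posterior and picking the highest. Choosing this way selects each option with a probability equal to its current chance of being the best.

```python
import random


def simulate(true_rates, rounds=5000, seed=1):
    """Return how many rounds of spend each option received."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    spend = [0] * len(true_rates)
    for _ in range(rounds):
        # One draw per option from its current posterior (uniform prior assumed).
        samples = [
            rng.betavariate(1 + s, 1 + f)
            for s, f in zip(successes, failures)
        ]
        chosen = samples.index(max(samples))
        spend[chosen] += 1
        # Observe a simulated outcome and update that option's counts.
        if rng.random() < true_rates[chosen]:
            successes[chosen] += 1
        else:
            failures[chosen] += 1
    return spend


# Hypothetical true success rates, unknown to the algorithm.
spend = simulate([0.02, 0.05])
print(spend)
```

Early on the spend is split fairly evenly because little is known. As evidence accumulates, most of the budget should shift toward the stronger option while the weaker one still receives an occasional test.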

Conclusion

When running ads, we have to balance trying new ideas with running our best performers. Getting the balance right is one of the biggest factors in a campaign's overall performance.

One great way to quickly find a near-optimal balance is to match your spending with your knowledge, using "randomized probability matching." Our testing system makes this easy by calculating, for you, the probability that each option is the best performer.